As developers and researchers continue to showcase LLMs’ strengths and work around weaknesses, AI's immediate opportunities and threats become more tangible. The debate around AGI’s existential risk heated up last week but, for the foreseeable future at least, AI-risk will primarily be a “AI-augmented human” risk. Last week, the public also realized that hardware could be a serious bottleneck as explained in a previous wrap-up, which may slow AI-development down, for better or for worse.

A long week-end inevitably led to a long wrap-up - apologies in advance - let's hope that you'll end-up learning a lot more here today. The long week-end was an opportunity to spend more time actually testing and building around available tools, which is the fun part, so we may stick to a longer format but on a less-frequent basis.

AI-Agents, as close as you can get from AGI with current tech

The emergence of Agentic AI, the term for integrated solutions combining several tools including at least one AI-Chatbot as entry point, was the trending theme last week: the top-three repositories on Github were dedicated to AI-Agents. These programs operate by setting a list of tasks to achieve a prompted objective, then execute these tasks one by one (building tools and/or reaching out to third-parties), classify information and remember it (i.e learn) and use this knowledge for future tasks or future objectives.

This is as close as you can get from AGI today, almost disturbing when human agents are simulated : check out this paper, which may describe a modern “Game of Life” situation in the sense that it opens the door to a brand new field of research, half-game half-science.

Even though it is possible to build your own agent using frameworks like Langchain or open-source tools from HuggingFace (amongst others) some turnkey solutions have already started to baffle the world by showing how AI can be motivated, think and execute a plan.

AutoGPT and BabyAGI are two of these platforms, both are open-source but you need an OpenAI API key, and they are not issued so frequently these days. Microsoft are working on their own solution, HuggingGPT / Microsoft Jarvis (presented in this paper) and an OpenAi solution is also expected soon. We hope to give a complete overview of these solutions once we have played around with them.

Twitter leveraging its gatekeeper position

If you use your Twitter account to authenticate on third party websites using Open Authorization (i.e not using dedicated logins/passwords for these websites), you might want to reconsider. Last week, Twitter and Substack seriously clashed, blaming each other for inappropriate practices towards a competitor. Substack is a newsletter platform where users can post long blogs and can monetize this content. The overlap in userbase between Twitter and Substack is very large and typical users would just share on Twitter an excerpt of their latest blog with a link to Substack. Twitter’s recent moves, e.g allowing longer Tweets and focus on monetization, impaired the symbiotic relationship. Elon Musk also claimed that Substack was extracting a lot of data via the Twitter API to build a direct competitor called Notes (this part is not completely unsubstantiated). That led Twitter to put anything containing “substack” on a blacklist : links could not be liked nor shared, searching the keyword “substack” would not return any result… and some users using Open Authorization reported that they could no longer access their Substack account. Unimpressed by Mastodon after the last Twitter “exodus” last year, users sought another alternative and Bluesky, backed by Jack Dorsey, appeared to be a good candidate. But it could not cope with the volume and crashed on Monday.

Regardless of any reasonable grounds for the decision, Twitter's demonstration of power could be seen as a textbook example of gatekeeping with a direct consequences for users – something the European Union has historically been very sensitive to. It wouldn’t be surprising to see European regulators investigate this specific case which, unfortunately for Twitter, was coincidental with a bug that made information shared in [private] circles visible to all users.

The US worry about AI-Doom, Europe worries about data privacy

Europe is known for its relatively strict stance towards Tech giants, an approach that is sometimes perceived as anti-innovation. Overthinking regulation can have drawbacks, in particular in Tech where companies typically move faster than regulators. And AI is a good example : the European Commission has already spent years thinking about AI regulation but LLMs going mainstream means that a lot of things need revisiting. However, it is not expected to be a paradigm shift for the General Data Protection Regulation (GDPR) framework and this is the background of the ChatGPT ban in Italy - something Germany is said to consider too.

The core of the issue is not that OpenAi is not able to tell users which data they hold, in order to be able to delete them entirely or to hand them over to ensure portability (a key requirement of GDPR that is often ignored and which may also be relevant in the recent Twitter/Substack case). Italy just pointed out the “absence of any legal basis that justifies the massive collection and storage of personal data" to "train" the chatbot.

Europe did not wait for the digital age to be concerned about data privacy and GDPR find its roots in the realization, after WWII, that logging user data can have catastrophic consequences, including industrial-scale murders when “data” meant religion, political views or sexual orientation. In France for example, the CNIL was established in the late 70’s to regulate data-handling (the “I” in CNIL) with the view to protect individuals’ freedom (the “L” in CNIL) after a public outrage against an initiative of the French government to create a centralized database of citizens. That’s important background to understand that GDPR is a citizen-focussed framework: it is an absolute requirement (with few terrorism-related caveats) regardless of size, nature or activity, that aims at protecting individuals against States/Governments as much as against organizations (this Wired story on Chinese surveillance equipment illustrates why it could be a good idea).

It is sometimes difficult to understand GDPR outside Europe because the rare data regulations are mostly consumer-focussed*, i.e using suppliers/service-providers as a starting point. That’s the case, for example, of the California Consumer Privacy Act (2018) that does not apply to nonprofit organizations or government agencies, and only applies to businesses that have revenues above $25m or handle information of more than 100k California residents or derive more than 50% of their revenues from selling California residents’ personal information.

Generative AI returns one likely output given context, not an average and not a range

For most concepts, the European meaning and the American meaning are almost interchangeable. The American and European cultures are close for historical reasons, yet there can be slight but meaningful differences, as evidenced by the fact that data-ownership is embedded in the concept of freedom in Europe but not in the US.

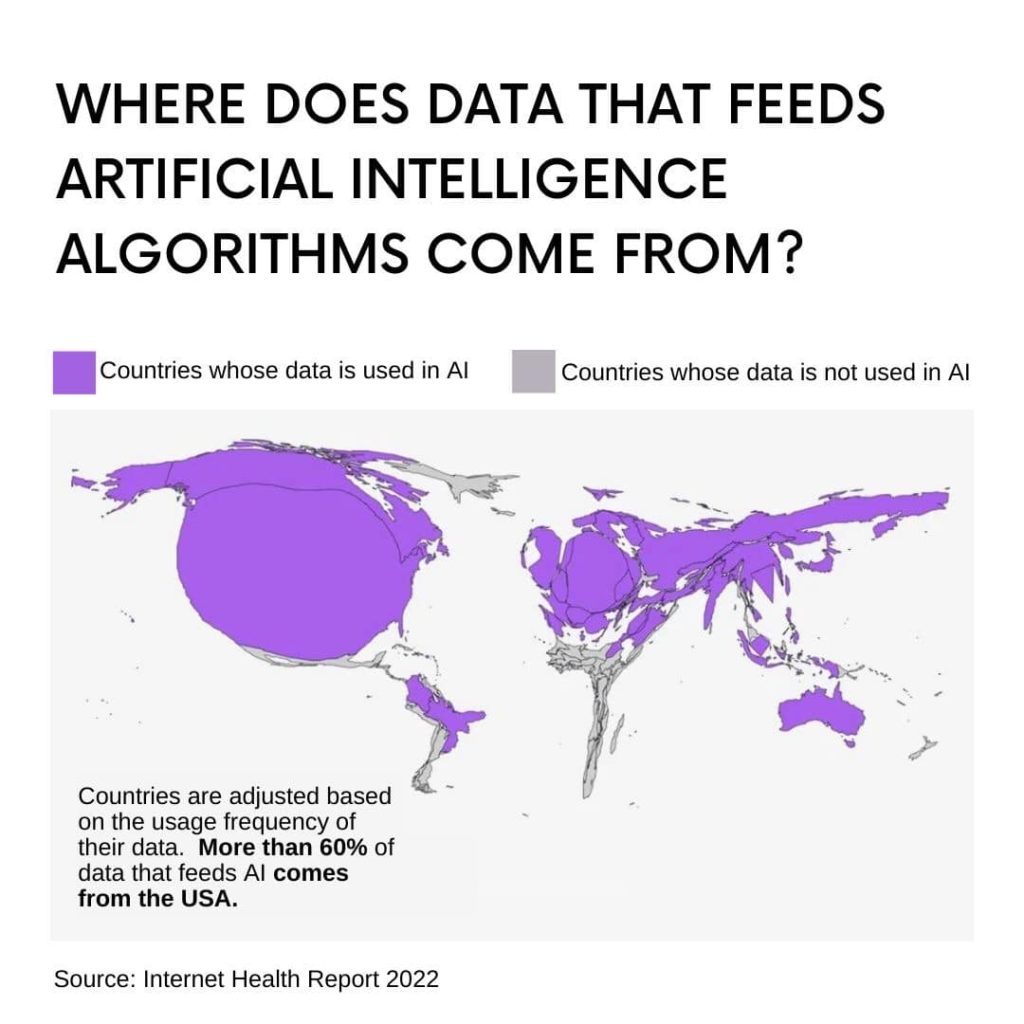

It’s hard to see how these small cultural differences will survive the upcoming technological era if generative AI is used as search engines or content generation tools: generative AI predicts a highly likely word or pixel given a certain context rather than offering a range of options or even reflecting an “average” view. If you don’t provide a clear context, you will receive the most likely view… which will reflect an American bias because of the data it has been trained on.

We used countries for illustrative purpose, but the reasoning applies at any scale : the mainstream view will always be pushed to anyone too lazy to add context. As any LLM carries cultural biases by construction, it would be interesting to see more models being trained, and agents from different models/cultures interact. In the meantime and so long as LLMs are the relevant AI technology, you can only hope that non-mainstream views continue to be shared on the open internet and are picked up in future LLM trainings. The feedback loop is a real threat for diversity in case of generative AI.

Intellectual Property from a legal perspective

This also means that people must get comfortable with the idea that any data posted on the internet will be used to train models. The question of intellectual property is only starting to unfold in the quickly evolving space.

Trademarks seem reasonably well protected even though enforcing the protection seems unrealistic owing to the potential volume of cases now that AI can generate content in seconds. Credible solutions to protect individuals image rights or voice rights are yet to be found.

Very likely unauthorized.

For unregistered content used as training data, high profile controversies have already come-up around coding abilities (Github Co-Pilot trained on all open/source code on Github ; the Google-Replit partnership at the end of March 2023 presents a similar profile), and for image generation where models could “replicate” specific Artist’s styles. In Japan, Artist protection turned into a witch-hunt for Manga fans at the end of last year. Even for platforms that claim to train their models on licensed images, it is not entirely clear that the licensing included the use for training (for that reason, we removed a mention in last week’s post that Abobe Firefly was the go-to platform for commercial uses). There are still a lot of moving parts in the notion of “fair use” when it comes to model inputs.

As far as outputs are concerned, law makers probably need to digest the consequences of recent improvements in generative technologies. The issue of images probably first comes to mind, but code may be the elephant in the room if Github Co-pilot actually writes 46% of all code on Github. For the moment, most jurisdictions would consider that anything generated by an algorithm can’t be protected. In the US, the case of Kris Kashtanova, reveals the extent of the gray area: Kashtanova initially managed to register an 18-page comic book largely generated with Midjourney, after proving substantial human input. However, when the Copyright Office understood the implication of the use of Midjourney, they reissued a registration without protection of the images (only texts and arrangement). They argued that it is impossible to predict what would come out of Midjourney (remember, just a likely pixel given context) so human input could hardly be demonstrated.

And things are just getting more complicated as the technology improves: last week, Midjourney released the “/describe” functionality which allows any user to upload an image, find out a relevant prompt corresponding to that image, and then re-generate a similar image on the platform. Same but different ; without training or substantial human inputs.

How likely is it that two LLMs speak the same dialect if they were built and trained independently?

Image generation is not the only area where users have found ways to maximize their productivity last week. An interesting example was the discovery that GPT-4 had impressive stenographic capabilities effectively allowing to compress messages, albeit in a slightly lossy way. The ramifications could be far reaching as it could give insights into the learning capabilities of these models. But the most surprising was the claim that Google’s Bard was able to decode messages encoded by GPT4 (did not replicate ourselves). One would not expect different models, trained on different data, to encode information in the exact same way.

Follow-ups on last week’s stories

Last week, we used the Balenciaga memes (living on) to show that new technology started to permeate Pop culture and tools were now accessible to build all kind of deepfakes along a spectrum ranging from creepy to funny to malicious. On the creepy side of things, virtual resuscitation let people “discuss” with family members or celebrities. There is no point spelling out what available tools mean in terms of malicious use-cases but you can check-out the Twitter thread below for a comprehensive overview of existing video solutions.

Last week, AI’s hardware bottleneck and its geopolitical dimension became more real for many people. The escalation of the Chinese threat over Taiwan, combined with reports that AWS, Microsoft, Google and Oracle could limit GPU access amid surge in demand, and big plans unveiled by Anthropic and Microsoft triggered a strong FOMO sentiment among people who know what to do with these chips. From an external perspective, it is difficult to gauge if this was justified or if it is just another bubble akin to the NFT gold rush 2 years ago. Speculators can nonetheless make a safe bet: as large datacenters require huge volumes of water for cooling**, this resource is going to become more valuable, even if GPUs turn-out to be a non-issue.