LeftoverLocals: Listening to LLM responses through leaked GPU local memory

Date: 2024-01-16

Description

This summary was drafted with mixtral-8x7b-instruct-v0.1.Q5_K_M.gguf

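On the Trail of Bits blog, Tyler Sorensen and Heidy Khlaaf describe LeftoverLocals, a vulnerability on Apple, Qualcomm, AMD, and Imagination GPUs that lets an attacker recover data that another process left behind in GPU local memory. Local memory is a small, fast on-chip memory that GPU kernels use as a software-managed cache. On affected devices it is not cleared between kernel launches, so a malicious kernel can read whatever the previous kernel stored there. The vulnerability is particularly significant for LLMs and other ML models run on impacted GPU platforms, because their kernels rely heavily on local memory for performance: by listening to it, an attacker can reconstruct another user's LLM responses with high precision.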
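To make the mechanism concrete, here is a toy Python simulation, not GPU code. It assumes a device whose local memory keeps its contents between kernel launches, which is the behavior LeftoverLocals exploits. `ToyComputeUnit`, `victim_kernel`, `listener_kernel`, and the `clear_on_exit` flag are hypothetical names for illustration only; on real hardware the kernels would be written in a GPU language such as OpenCL, Metal, or Vulkan compute shaders, and the listener would copy what it reads into global memory for the host to retrieve.

```python
from dataclasses import dataclass, field

# Toy size; real GPU local memory is much larger.
LOCAL_MEM_WORDS = 8


@dataclass
class ToyComputeUnit:
    """Simulated GPU compute unit whose local memory persists across launches."""

    local_mem: list = field(default_factory=lambda: [0] * LOCAL_MEM_WORDS)

    def launch(self, kernel, *args):
        # Assumed vulnerable behavior: local memory is NOT zeroed between
        # kernel launches, even when they come from different processes.
        return kernel(self.local_mem, *args)


def victim_kernel(local_mem, secret, clear_on_exit=False):
    # The victim stages private data (e.g. model activations) in local memory.
    for i, value in enumerate(secret):
        local_mem[i] = value
    # ... the real computation would use local_mem here ...
    if clear_on_exit:
        # Mitigation modeled here: zero local memory before the kernel exits.
        for i in range(len(local_mem)):
            local_mem[i] = 0


def listener_kernel(local_mem):
    # The attacker reads local memory without initializing it first and
    # returns a copy (a real listener would write it to global memory).
    return list(local_mem)


# Victim runs first, then the attacker's listener on the same compute unit.
cu = ToyComputeUnit()
cu.launch(victim_kernel, [7, 4, 1])
leaked = cu.launch(listener_kernel)
print(leaked)  # [7, 4, 1, 0, 0, 0, 0, 0]

# Same sequence, but the victim clears its local memory before exiting.
cu_safe = ToyComputeUnit()
cu_safe.launch(victim_kernel, [7, 4, 1], True)
safe = cu_safe.launch(listener_kernel)
print(safe)  # [0, 0, 0, 0, 0, 0, 0, 0]
```

The listener is identical in both runs; only the victim's behavior changes. In this toy model, wiping on exit protects only the kernels that actually do it, and any kernel that skips the wipe leaves its data for the next launch.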


Recently on :

Information Processing | Computing

Security | Surveillance | Privacy

WEB - 2024-12-30

Fine-tune ModernBERT for text classification using synthetic data

David Berenstein explains how to finetune a ModernBERT model for text classification on a synthetic dataset generated from argi...

WEB - 2024-12-25

Fine-tune classifier with ModernBERT in 2025

In this blog post Philipp Schmid explains how to fine-tune ModernBERT, a refreshed version of BERT models, with 8192 token cont...

WEB - 2024-12-18

MordernBERT, finally a replacement for BERT

6 years after the release of BERT, answer.ai introduce ModernBERT, bringing modern model optimizations to encoder-only models a...

WEB - 2024-03-04

Nvidia bans using translation layers for CUDA software | Tom's Hardware

Tom's Hardware - Nvidia has banned running CUDA-based software on other hardware platforms using translation layers in its lice...

WEB - 2024-02-21

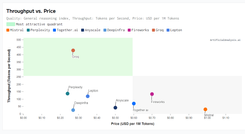

Groq Inference Tokenomics: Speed, But At What Cost? | Semianalysis

Semianalysis - Groq, an AI hardware startup, has been making waves with their impressive demos showcasing Mistral Mixtral 8x7b ...